In order to learn about some of the latest neural network software libraries and tools, the following is a description of a small project to build a chatbot. Given increasing popularity of chatbots and their growing usefulness, it seemed like a reasonable endeavor to build one. Nothing complicated, but enough to better understand how contemporary tools are used to do so.

This material assumes MongoDB is already installed, a Python 3.6 environment is installed and usable. Also, basic knowledge of NoSQL, machine learning, and coding skills are useful. The code and data used for this example or located at GitHub.

Requirements

Nothing complicated, just a simple experiment to play with the combinations of Tensorflow, Python, and MongoDB. The requirements are as defined:

- A chatbot needs to demonstrate a simple conversation capability.

- A limited set of coherent responses should be returned demonstrating a basic understanding of the user input.

- Define context in a conversation, reducing the number of possible responses to be more contextually relevant.

Design

Use MongoDB to store documents containing

- a defined classification or name of user input. this is the intent of the input/response interaction

- a list of possible responses to send back to the user

- a context value of the intent used to guide or filter which response lists makes sense to return

- a set of patterns of potential user input. the patterns are used to build the model that will predict the probabilities of intent classifications used to determine responses.

A utility will be implemented to build models from the database content. The model will be loaded by a simple chatbot framework. Execution of the framework allows a user to chat with the bot.

The chatbot framework loads a prebuilt predictive model and connects to MongoDB to retrieve documents which contain possible responses and context information. It also drives a simple command line interface to:

- capture user input

- process the input and predict an intent category using the loaded model

- randomly pick a response from the intent document

In the database, each document contains a structure including:

- the name of the intent

- a list of sentence patterns used to build the predictive model

- a list of potential responses associated with the intent

- a value indicating the context used to filter the number of intents used for a response (contextSet – to define the context; contextFilter – used to filter out unrelated intents)

For example, the ‘greeting’ intent document in the MongoDB is defined as:

{

"_id" : ObjectId("5a160efe21b6d52b1bd58ce5"),

"name" : "greeting",

"patterns" : [ "Hi", "How are you", "Is anyone there?", "Hello", "Good day" ],

"responses" : [ "Hi, thanks for visiting", "Hi there, how can I help?", "Hello", "Hey" ],

"contextSet" : ""

}

Implementation

To build the model used to predict possible responses, the patterns sentences are used. Patterns are grouped into intents. Basically meaning, the sentence refers to a conversational context. The MongoDB is populated with a number of documents following the structure shown above.

This example uses a number of documents to talk about AI. To populate the database with content for the model, from a mongo prompt:

> use Intents

> db.ai_intents.insert({

"name" : "greeting",

"patterns" : [ "Hi", "How are you", "Is anyone there?", "Hello", "Good day" ],

"responses" : [ "Hi, thanks for visiting", "Hi there, how can I help?", "Hello", "Hey" ],

"contextSet" : ""

})

The other documents are inserted in the same way. Various tools such as NoSQLBooster are useful when working with MongoDB databases.

There is a mongodump export of the documents used in this example in the GitHub repository.

Building the Prediction Model

The first part of building the model is to read the data out of the database. The PyMongo library is used throughout this code.

# connect to db and read in intent collection

client = MongoClient('mongodb://localhost:27017/')

db = client.Intents

ai_intents = db.ai_intents

The ai_intents variable references the document collection. Next, parse information into arrays of all stemmed words, intent classifications, and documents with words for a pattern tagged with the classification (intent) name. A tokenizer is used to strip out punctuation. Each document from the ai_intents collection in the database is extracted into a cursor using ai_intents.find().

words = []

classes = []

documents = []

# tokenizer will parse words and leave out punctuation

tokenizer = RegexpTokenizer("[\w']+")

# loop through each pattern for each intent

for intent in ai_intents.find():

for pattern in intent['patterns']:

tokens = tokenizer.tokenize(pattern) # tokenize pattern

words.extend(tokens) # add tokens to list

# add tokens to document for specified intent

documents.append((tokens, intent['name']))

# add intent name to classes list

if intent['name'] not in classes:

classes.append(intent['name'])

From this categorized information, a training set can be generated. The final data_set variable will contain a bag of words and the array indicating which intent it belongs to. The bag for each pattern has words identified (flagged as a 1) in the array. The output_row identifies which intent’s pattern documents are being evaluated.

for document in documents:

bag = []

# stem the pattern words for each document element

pattern_words = document[0]

pattern_words = [stemmer.stem(word.lower()) for word in pattern_words]

# create a bag of words array

for w in words:

bag.append(1) if w in pattern_words else bag.append(0)

# output is a '0' for each intent and '1' for current intent

output_row = list(output_empty)

output_row[classes.index(document[1])] = 1

data_set.append([bag, output_row])

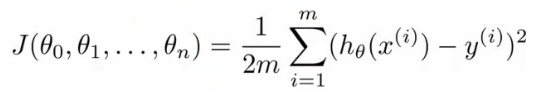

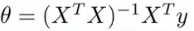

The last part is to create the model. With Tensorflow and TFLearn, it is simple to create a basic deep neural network and evaluate the data set to create a predictive model from the sentence patterns defined in the intent documents. TFLearn uses numpy arrays, so the data_set array needs to be converted to the numpy array. Then the data_set is partitioned into the input data array and possible outcome arrays for each input.

data_set = np.array(data_set) # create training and test lists train_x = list(data_set[:,0]) train_y = list(data_set[:,1])

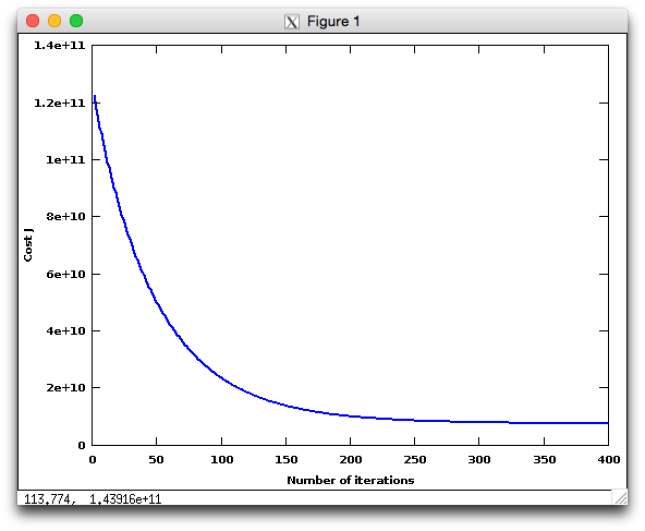

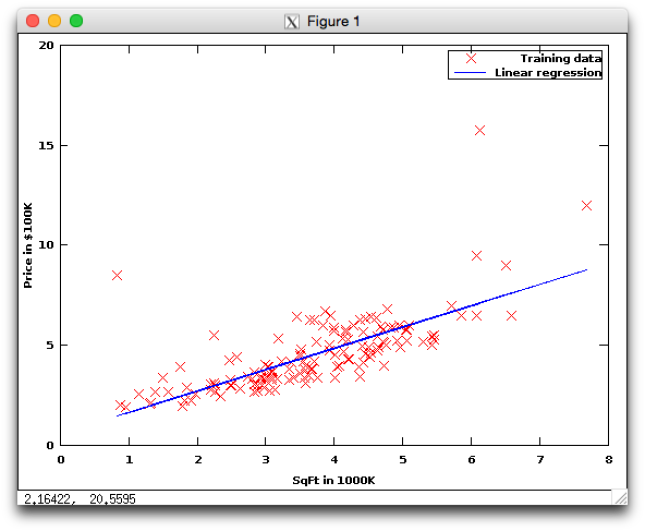

Defining the neural network is done by setting its shape and the number of layers. Also defined is the algorithm to fit the model. In this case, regression. The predictive model is produced by TFLearn and Tensorflow using a Deep Neural Network, using the defined training data. Then save (using pickle) the model, words, classes and training data for use by the chatbot framework.

# Build neural network

net = tflearn.input_data(shape=[None, len(train_x[0])])

net = tflearn.fully_connected(net, 8)

net = tflearn.fully_connected(net, 8)

net = tflearn.fully_connected(net, len(train_y[0]), activation='softmax')

net = tflearn.regression(net)

# Define model and setup tensorboard

model = tflearn.DNN(net, tensorboard_dir='tflearn_logs')

# Start training (apply gradient descent algorithm)

model.fit(train_x, train_y, n_epoch=1000, batch_size=8, show_metric=True)

model.save('model.tflearn')

pickle.dump( {'words':words, 'classes':classes, 'train_x':train_x, 'train_y':train_y}, open( "training_data", "wb" ) )

Running the above code reads the intent documents, builds a predictive model and saves all the information to be loaded by the chatbot framework.

Building the Chatbot Framework

The flow of execution for the chatbot frameworks is:

- load training data generated during the model building

- build a neural net matching the size and shape of the one used to build the model

- load the predictive model into the network

- prompt the user for input to interact with the chatbot

- for each user input, classify which intent it belongs to and pick a random response for that intent

Code for the chatbot driver is simple. Since the amount of data being used in this example is small, it is loaded into memory. An infinite loop is started to prompt a user for input to start the dialog.

# connect to mongodb and set the Intents database for use

client = MongoClient('mongodb://localhost:27017/')

db = client.Intents

model = load_model()

prompt_user()

The model created previously is loaded with a simple neural network defining the same dimensions used to create the model. It is now ready for use to classify input from a user.

The user input is analyzed to classify which intent it likely belongs to. The intent is then used to select a response belonging to the intent. The response is displayed back to the user. In order to perform the classification, the user input is:

clean_up_sentence function

- tokenized into an array of words

- each word in the array is stemmed to match stemming done in model building

bow function

- create an array the size of the word array loaded in from the model. it contains all the words used in the model

- from the cleaned up sentence, assign a 1 to each bag of words array element that matches a word from the model

- convert the array to numpy format

classify function

- using the bag of words, use the model to predict which intents are likely needed for a response

- with a defined threshold value, eliminate possibilities below a percentage likelihood

- sort the result in descending probability

- the result is an array of (intent name, probability) pairs

A sample result with the debug switch set to true may look like

enter> what is AI

[('AIdefinition', 0.9875145)]

response function

The response function gets the list of possible classifications. The core of the logic is two lines of code to find the document in the ai_intents collection matching the name of the classification. If a document is found, randomly select a response from the set of possible responses and return it to the user.

doc = db.ai_intents.find_one({'name': results[0][0]})

return print(random.choice(doc['responses']))

The additional logic in this function handles context about what the user asked to filter possible responses. In this example, each document has either a contextSet or contextFilter field. If the document retrieved from the database contains a contextSet value, the value should set for the current user. A userId is added to the context dictionary with the value of the entry set to the contextSet value.

if 'contextSet' in doc and doc['contextSet']:

if debug: print('contextSet=', doc['contextSet'])

context[userID] = doc['contextSet']

Before querying for a document based on a classification found the response function checks if a userID exists in the context. If it does, the query includes searching with the context string to match a document containing a contextFilter field with a matching context value.

if userID in context:

doc = db.ai_intents.find_one({'$and':[{'name': results[0][0]},{'contextFilter' : { '$exists': True, '$eq': context[userID] }}]})

del context[userID]

After finding the document, the context is no longer needed and removed from the dictionary.

Usage

Since this a simple example for experimentation, the dialog with the chatbot will certainly not be intelligent. But, the constructs and basic logic provide a basis for learning and can be expanded upon.

A sample dialog with this implementation may go like the following:

enter> hello Hi there, how can I help? enter> what do you know I can talk about AI or cats. Which do you want to chat about? enter> AI OK. Let's talk about AI. enter> what is AI AI stands for artificial intelligence. It is intelligence displayed by machines, in contrast with the natural intelligence displayed by humans and other animals. enter> when will AI take my job AI is artificial intelligence that is evolving to become smart robot overlords who will dominate humans. enter> I want to talk about something else I can talk about AI or cats. Which do you want to chat about? enter> cats OK. Let's talk about cats. enter> quit